LLaMA 2: Meta introduces new AI language model

Published on 19 July 2023 | 2 mins, 302 words | By Orimédias

The US technology company Meta has presented a new version of its AI language model. LLaMA 2 is open source and performs roughly on par with GPT-3.5.

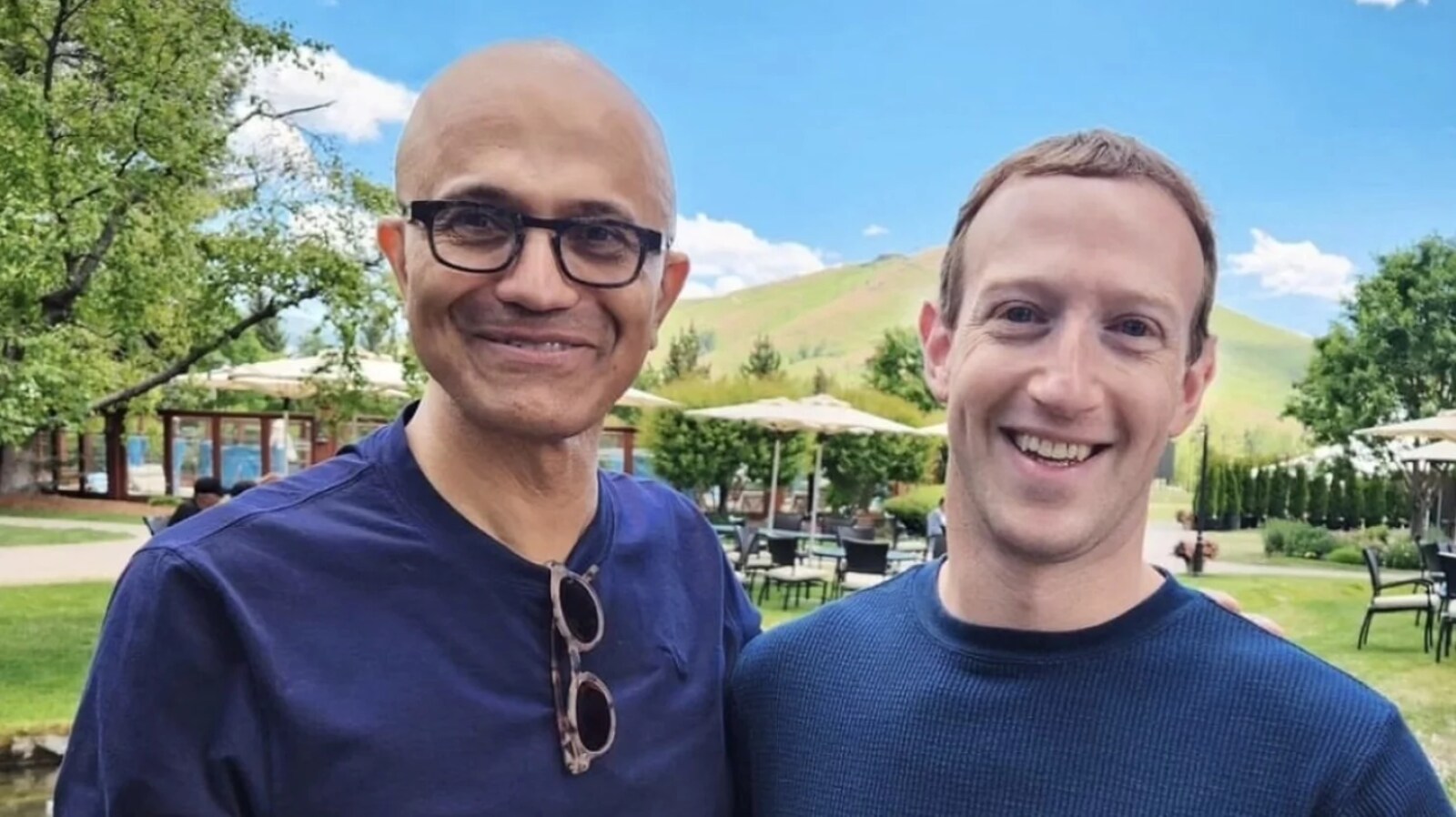

Together with Microsoft, Meta has presented LLaMA 2. The second version of the generative language model is open source; according to the Financial Times, Meta is planning a commercial version with a paid licence. This means that LLaMA 2 could soon find its way into software products. The cooperation with Microsoft covers two things: distribution via Azure, and optimisation for running LLaMA 2 locally under Windows.

According to Meta, the artificial intelligence (AI) is significantly more powerful than the first version. In benchmarks, it is about on par with OpenAI's GPT-3.5, but far behind the newer GPT-4. For more details, see Meta's research report.

LLaMA 2 comes in three sizes: with 7 billion, 13 billion or 70 billion parameters. It is said to be particularly suitable as a tool for programming. The language model will "change the landscape of the LLM market", writes Meta's AI chief Yann LeCun on Twitter. It is available on Microsoft Azure and Amazon Web Services (AWS), among other platforms. Access can be requested via this form.

With its open source model, Meta is swimming against the tide of the other big AI suppliers like OpenAI and Google. The advantage of this approach is that companies can further develop LLaMA 2 themselves. However, open availability also raises security concerns: the AI could be misused for dubious purposes, such as disinformation or spam.

What Meta does not reveal is where the training data for LLaMA 2 comes from. This is also a source of criticism, because such data is often taken from the web without authorisation. In Meta's case, it could also come from the databases of its own social networks, Facebook and Instagram.
